Sovereign AI: Why Local LLMs Are the Future for UK Business Data

Quick Summary

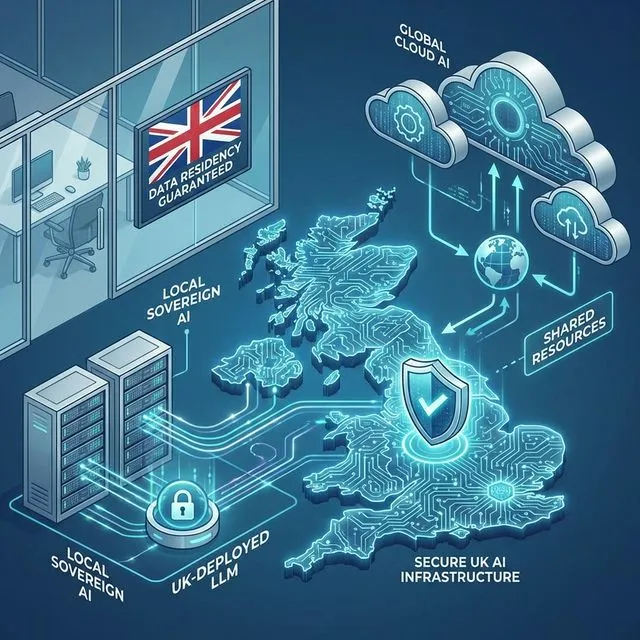

UK businesses are pivoting from US cloud AI platforms to local LLMs due to geopolitical risks, data sovereignty concerns, and the 2026 hardware breakthrough making on-premise AI economically viable.

The UK government's £500 million Sovereign AI Unit and the Data (Use and Access) Act 2025 create regulatory tailwinds for domestic AI infrastructure, whilst NPU laptops and dual RTX 5090 servers now run capable open-weight models at near-zero marginal cost.

TopTenAIAgents.co.uk has evaluated 23 local LLM deployment tools and finds that, at high token volumes, UK businesses can save around £100,000 in 5-year total cost of ownership (TCO) by moving privacy-critical workloads from cloud APIs to sovereign AI infrastructure.

The "Digital Kill Switch" Risk and the Geopolitical Imperative

The dominant narrative surrounding cloud-based AI emphasises limitless scalability and immediate access to frontier models. However, this narrative obscures a critical vulnerability: the absolute dependence on foreign infrastructure. For UK businesses processing sensitive intellectual property, financial models, legal briefs, and proprietary research, the risks associated with US-based cloud AI platforms are multifaceted, spanning physical infrastructure vulnerabilities, legal exposure, and the opaque nature of proprietary model data handling.

The Physical Vulnerability: Transatlantic Connectivity Risks

The global digital economy relies on a network of subsea fiber-optic cables, which carry approximately 99% of all international internet traffic.5 The illusion of continuous cloud availability was severely challenged by a series of incidents in 2024 and 2025\. Sabotage and maritime accidents in the Red Sea, the Baltic Sea, and off the coast of Taiwan resulted in severed subsea internet cables, causing cascading latency surges and service disruptions across global supply chains.6 A further incident in September 2025 directly impacted Microsoft's Azure cloud services, demonstrating the physical fragility of transatlantic data flows.8

The UK National Security Strategy Joint Committee has highlighted the nation's reliance on roughly 60 subsea cables, warning that simultaneous damage to multiple cables during periods of heightened international tension would paralyse the UK economy.9 If a British enterprise's core operational logic, automated customer service routines, and document analysis workflows are entirely tethered to data centers located in Virginia or California, a physical severance of these cables acts as an immediate operational kill switch: the workflows that depend on those services stall as soon as latency or packet loss exceeds what they can tolerate.

The Legal Vulnerability: Extraterritorial Jurisdiction and Vendor Lock-in

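Beyond physical infrastructure, the legal frameworks governing data processing present a profound sovereignty challenge. United States legislation, specifically the Clarifying Lawful Overseas Use of Data (CLOUD) Act and the Foreign Intelligence Surveillance Act (FISA) Section 702, grants American law enforcement and intelligence agencies the power to compel US-based providers to disclose data within their possession, custody, or control, regardless of where that data is physically stored.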

Consequently, even if a US cloud provider routes a UK business's API calls through a data center located in London to ostensibly comply with local regulations, the corporate entity remains subject to US jurisdiction.12 This creates a direct legal conflict with the UK General Data Protection Regulation (UK GDPR) and the broader European ambition for digital sovereignty, which seeks to protect sensitive economic data from extra-European laws.13 For European and UK enterprises, this reliance on US platforms creates a strategic blind spot, exposing organisations to legal, operational, and reputational risks that are often underestimated by executive boards.11

Furthermore, geopolitical sanctions can inadvertently trigger digital kill switches. The Nayara Energy incident demonstrated that when the European Union listed a company under asset-freeze rules, the classification of cloud and IT services as "economic resources" forced service providers to suspend operations instantly to avoid compliance violations.14 This established a precedent where conflicting global legal frameworks can force foreign IT vendors to pull the plug on enterprise infrastructure without a bespoke, targeted shutdown order. The vendor lock-in inherent to relying exclusively on OpenAI, Anthropic, or Google exacerbates this risk, leaving UK firms at the mercy of foreign policy shifts.

The "Black Box" Problem and Intellectual Property Leakage

When UK businesses utilise proprietary models through cloud APIs, they transmit their most sensitive operational data (redacted or otherwise) into a "Black Box." This includes merger and acquisition strategies, unreleased financial models, medical records, and proprietary scientific research. Even with enterprise service level agreements promising zero data retention for foundational model training, the metadata, query patterns, and volume metrics remain visible to the cloud provider.

Auditing these compliance claims is practically impossible for the end-user, who must simply trust that a foreign entity is accurately discarding transient data. Relying on local LLMs eliminates this vector entirely. When the model weights reside on local silicon, the data never leaves the corporate network, giving the organisation direct, verifiable control over its intellectual property and data residency. The corporate perimeter remains intact.

The 2026 Technological Breakthrough: The Hardware Renaissance

The primary argument against local AI deployment has historically centered on the prohibitive capital expenditure required to purchase enterprise-grade hardware, such as massive NVIDIA H100 GPU clusters. However, the hardware landscape in 2026 has crossed a critical technological threshold, democratising high-performance inference through the proliferation of advanced Neural Processing Units (NPUs) and high-bandwidth memory architectures.

The Rise of the NPU Laptop for Edge Inference

The commercial availability of processors featuring vastly expanded dedicated AI silicon has made localised inference viable on standard corporate endpoint devices. The AMD Ryzen AI Max+ 395 processor integrates a 40-compute-unit RDNA 3.5 graphics engine on the same chip, rivalling the discrete GPUs that were previously required for any meaningful AI workload.3 In the cited tests, it generated up to 50.7 tokens per second on quantized models.15

Concurrently, Intel's Core Ultra 9 Series 2 processors (such as the 285H) have demonstrated strong AI compute performance across the central processing unit, the graphics processing unit, and the neural processing unit, and were the first to achieve full NPU support in MLPerf Client v0.6 benchmarks.16 In the Apple ecosystem, the M3 Ultra and M4 Max chips use large unified memory architectures. The Mac Studio M3 Ultra, configurable with up to 512GB of unified memory and 819GB/s of memory bandwidth, avoids the bottleneck of conventional CPU and GPU systems, where model weights that do not fit in GPU memory must traverse the comparatively slow PCIe bus.18 This allows Apple silicon to run large, highly capable LLMs entirely in memory on a device that sits unobtrusively on a standard office desk.

The Desktop Server Revolution: Dual RTX 5090 Architecture

For multi-user small to medium enterprise deployments, the situation has changed even more dramatically. The NVIDIA RTX 5090, featuring 32GB of GDDR7 VRAM with 1,792 GB/s bandwidth, can run heavily quantized 70-billion-parameter models on a single consumer-grade card.18 Dual RTX 5090 configurations now achieve an evaluation rate of 27 tokens per second on 70B models, matching the generation speed of an enterprise H100 GPU at a fraction of the hardware cost.18 This makes the localised deployment of near-frontier open-weight models financially viable for mid-market businesses without multi-million-pound data center build-outs.
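The VRAM claim above follows from simple arithmetic: weight memory is roughly the parameter count times the bits per weight, divided by eight. The sketch below assumes the weights dominate memory use and ignores the KV cache, activations and runtime overhead, so real requirements are somewhat higher. The bit widths are illustrative assumptions rather than specific file formats; they show why a 70B model fits on one 32GB card only at roughly 3 bits per weight, while a typical ~4.5-bit quantization needs two cards.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate GB (10^9 bytes) needed to hold the model weights alone."""
    # params * bits / 8 bytes; the 10^9 factors for "billion" and "GB" cancel out
    return params_billions * bits_per_weight / 8


RTX_5090_VRAM_GB = 32  # per card, as quoted above

for bits in (16, 8, 4.5, 3.0):
    need = weight_memory_gb(70, bits)
    one_card = "fits" if need < RTX_5090_VRAM_GB else "too large"
    two_cards = "fits" if need < 2 * RTX_5090_VRAM_GB else "too large"
    print(f"70B at {bits} bits/weight: {need:.1f} GB | 1x5090: {one_card} | 2x5090: {two_cards}")
```

Treat "fits" as a lower bound: longer contexts add KV-cache memory on top of these figures.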

The Economics Shift: Variable Cloud Costs vs. Fixed Local Assets

This hardware revolution brings a fundamental shift in enterprise software economics. Cloud AI relies on a pay-per-token model, a variable operational cost that scales linearly, and often unpredictably, with usage. For example, processing one million tokens daily at an average rate of £0.002 per 1,000 tokens costs £2 per day, or roughly £60 per month, and that bill grows with every workflow into which employees integrate AI.

Conversely, local AI represents a fixed hardware cost with near-zero marginal cost per inference. A high-end laptop with an NPU requires a one-time capital expenditure of approximately £1,500. A dedicated multi-user server requires an investment of £5,000 to £10,000. Following this initial outlay, the main recurring expense besides IT staff time is electricity, typically £10 to £50 per month.19 Local deployment makes economic sense for high-volume, low-variability use cases, privacy-critical applications, and scenarios that require offline operation or ultra-low latency.

UK Government Alignment: The Push for "Distributed AI"

The strategic pivot toward local LLMs is not occurring in a vacuum; it is heavily supported by the UK government's legislative agenda and broader industrial strategy. Moving aggressively away from the centralised hyperscaler model dominant in the United States, the UK is actively fostering a "distributed AI" infrastructure to enhance national resilience, stimulate regional technology hubs, and secure technological independence post-Brexit.

The Data (Use and Access) Act 2025

The Data (Use and Access) Act 2025 (DUAA), which received Royal Assent in mid-2025 and is being brought into force via commencement orders throughout 2026, fundamentally alters the UK data protection landscape to favour domestic AI innovation.1 Crucially, the Act departs from stringent European Union standards regarding international data transfers. Instead of requiring "equivalent" protections in recipient nations, the UK has introduced a "not materially lower" test, giving businesses more flexibility while simultaneously emphasising the value of keeping sensitive data processing within domestic jurisdictions.1

Furthermore, the DUAA substantially relaxes the rules on Automated Decision-Making. Solely automated decisions with significant effects are now generally permitted, subject to safeguards, with the stricter restrictions retained where special category personal data is involved.1 Separately, the Act introduces "recognised legitimate interests," a set of pre-approved purposes for which data controllers no longer need to perform the legitimate-interests balancing test previously required. This regulatory clarity allows UK businesses to deploy local AI agents for complex document analysis, intelligent customer routing, and internal operations with significantly reduced legal friction, provided appropriate safeguards such as the option of human intervention are maintained.

The Sovereign AI Unit and National Infrastructure

In April 2026, the Department for Science, Innovation and Technology is launching the next phase of the Sovereign AI Unit. Backed by £500 million in public funding and chaired by venture capitalist James Wise, this unit is tasked with establishing specific AI Growth Zones across Wales, Oxfordshire, and the North East, while expanding sovereign computational capacity.2

A cornerstone of this sovereign capability is the Isambard-AI supercomputer located in Bristol. As the most powerful AI supercomputer in the UK, featuring 5,448 NVIDIA GH200 Grace Hopper Superchips, Isambard-AI represents a massive national investment in foundational compute.26 While Isambard-AI handles massive national foundational training (such as University College London's "BritLLM" project aimed at improving public services across all British languages 26), its existence underscores the national imperative to end reliance on foreign computing infrastructure. The open-weight models trained on Isambard-AI are increasingly being pushed down to the enterprise level for local, on-premise inference, creating a localised technology supply chain.

Cloud vs. Local AI: The Comprehensive Strategic Comparison

To assist IT leadership in evaluating architectural deployments, the following matrix compares US-based Cloud AI platforms against Local Sovereign AI deployments across critical operational dimensions. Executives can consult the reviews section of TopTenAIAgents to compare cloud vs. local AI platforms for UK compliance in further detail.

| Strategic Dimension | Cloud AI (Hyperscalers) | Local Sovereign AI (On-Premise) |
|---|---|---|
| Data Privacy & Sovereignty | ⚠️ High Risk. Data leaves the corporate perimeter and is subject to US extraterritorial jurisdiction (CLOUD Act, FISA 702). | ✅ Minimal Risk. Data residency is physically enforced; data never leaves the UK facility. |
| Cost Structure | ⚠️ Variable Operational Expenditure. Pay-per-token pricing scales linearly and unpredictably with heavy API usage. | ✅ Fixed Capital Expenditure. High initial hardware cost, but near-zero marginal cost per token thereafter. |
| Performance & Latency | ✅ Exceptional reasoning capability, but latency depends on network congestion and subsea cable integrity. | ⚠️ Slightly behind frontier closed models in complex coding, but no network round trip, so latency depends only on local hardware. |
| Setup & Engineering Complexity | ✅ Turnkey. Integration requires only an API key and basic REST architectural knowledge. | ⚠️ Moderate to High. Requires hardware provisioning, Linux administration, and environment containerisation. |
| Maintenance & Patching | ✅ Managed entirely by the vendor. Silent model updates can, however, break established prompts without warning. | ⚠️ High internal burden. IT teams must manage security patches, model weight updates, and hardware failure. |
| Compliance & Auditability | ⚠️ Opaque. Limited visibility into data retention, logging, and secondary use by the vendor. | ✅ Transparent. Full control over access logs, prompt retention, and audit trails required for GDPR compliance. |
| Offline Operability | ❌ Impossible. An internet connection is strictly required for all inference. | ✅ Fully Capable. Ideal for air-gapped environments, secure government facilities, and offline field operations. |
| Customization & Fine-Tuning | ⚠️ Expensive and restricted. Fine-tuning is metered and limited by strict provider acceptable use policies. | ✅ Extensive. Full model weight adjustments, continued pre-training, and specialised domain alignment, limited only by the model's licence and available hardware. |
| Reliability | ⚠️ Dependent entirely on vendor uptime and global network stability. | ✅ Controlled uptime. Internal IT dictates maintenance windows and disaster recovery protocols. |
| Audit Trail | ⚠️ Limited visibility. Providers offer high-level usage metrics but lack granular prompt inspection. | ✅ Complete logging control. Every prompt and response can be recorded for regulatory review. |

Recommendation Framework: Organisations should adopt a pragmatic hybrid architectural stance. Cloud AI is highly suitable for rapid prototyping, low-volume queries, and non-sensitive generative tasks such as marketing copy generation. Conversely, Local Sovereign AI is an absolute requirement for high-volume automated processing, analysing personally identifiable information, processing unredacted financial records, and operating within highly regulated UK environments subject to regulatory oversight.

Step-by-Step: Deploying Local Llama 4 for UK Businesses

Meta's Llama 4 family represents a watershed moment for open-weight generative models. The Llama 4 Scout model, featuring 17 billion active parameters (109B total via its Mixture-of-Experts architecture) and an unprecedented 10-million token context window, allows enterprises to input vast corpora of documents simultaneously without aggressive chunking.4 The Llama 4 Maverick variant pushes this further with 128 experts, offering high capability in complex reasoning while remaining efficient.4
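One practical consequence of the Mixture-of-Experts design is worth making explicit: every expert must be resident in memory, even though only about 17B parameters are used for each token. The sketch below applies weights-only arithmetic to Scout's 109B total and 17B active parameters. It is an approximation that ignores the KV cache, which grows with context length and becomes very large long before the 10-million-token limit is reached on local hardware.

```python
def weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate weight memory in GB: parameters x bits / 8."""
    return params_billions * bits_per_weight / 8


SCOUT_TOTAL_B = 109   # all experts: determines how much memory must be provisioned
SCOUT_ACTIVE_B = 17   # parameters used per token: drives per-token compute

for bits in (16, 8, 4):
    resident = weights_gb(SCOUT_TOTAL_B, bits)
    per_token = weights_gb(SCOUT_ACTIVE_B, bits)
    print(f"{bits}-bit: ~{resident:.1f} GB must be loaded; "
          f"roughly {per_token:.1f} GB of weights used per generated token")
```

Memory sizing therefore follows the total parameter count, while generation speed tracks the much smaller active count, which is why MoE models can feel fast on hardware that merely has enough memory to hold them.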

The following implementation guide details the deployment of a local Llama 4 environment tailored for a UK banking or financial services context, specifically for analysing sensitive financial PDFs without data exiting the corporate firewall.

Phase 1: Hardware Selection and Provisioning

Organisations must choose between localised endpoint deployments and centralised on-premise servers based on user volume and inference requirements.

For small teams of one to five users prioritising mobility and ease of setup, a high-end laptop is sufficient. Procurement should target devices like the Dell XPS 17 or HP ZBook Ultra G1a equipped with an AMD Ryzen AI Max+ 395 or Intel Core Ultra 9 processor with an integrated NPU.3 These machines must be configured with a minimum of 32GB of LPDDR5 RAM (though 64GB is strongly recommended so that model weights can be loaded fully into memory) and a 1TB NVMe SSD. The capital expenditure for this tier ranges from £1,500 to £2,500. Procurement can often be routed through authorised UK distributors for Dell and HP enterprise hardware to secure bulk discounts.

For broader enterprise deployments supporting five to fifty concurrent users, a dedicated sovereign server is mandatory. This requires a custom rackmount build or an HP ProLiant server configured with 128GB to 256GB of ECC RAM and dual NVIDIA RTX 5090 GPUs (providing 64GB of combined VRAM, with larger models split across the two cards) or enterprise-grade L40S GPUs.18 This multi-user, high-performance architecture requires a capital expenditure of £8,000 to £15,000 and needs standard server room cooling and power delivery.

Phase 2: Software Stack Installation

For enterprise stability, Ubuntu 22.04 LTS remains the standard host operating system, offering robust compatibility with NVIDIA CUDA drivers and open-source machine learning libraries. The most accessible runtime environment for local LLM deployment is Ollama, which builds on llama.cpp and hides the work of compiling it and configuring GPU acceleration behind a simple command-line interface.

The IT administrator begins by updating the host repositories and executing the Ollama installation script:

```bash
# Update Ubuntu package repositories and apply security updates
sudo apt update && sudo apt upgrade -y

# Install the Ollama runtime engine via the official installation script
curl -fsSL https://ollama.com/install.sh | sh

# Verify the installation
ollama --version

# Once a model is loaded, confirm it is running on the GPU:
# the PROCESSOR column should show e.g. "100% GPU" rather than CPU
ollama ps
```

Once the runtime environment is active, the administrator pulls the required model weights to local encrypted storage. Depending on the hardware provisions, different model sizes and quantizations are selected:

```bash
# Llama 4 is released only as large Mixture-of-Experts models (Scout, Maverick),
# so use a small Llama 3.1 8B model for rapid, low-latency tasks and basic parsing
ollama pull llama3.1:8b

# Pull Llama 4 Scout (17B active parameters) for complex reasoning and long context
ollama pull llama4:scout

# Pull a 70B model (Llama 3.3; Ollama's default tag is 4-bit quantized)
# for maximum capability on dual-GPU setups
ollama pull llama3.3:70b
```
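`ollama list` shows which models are present on the machine. For scripted checks, such as a monitoring job confirming the weights are in place before a workflow runs, Ollama's local REST API exposes the same information at `/api/tags`. The sketch below uses only the Python standard library and assumes Ollama is listening on its default address, `127.0.0.1:11434`. It returns `None` when the server cannot be reached, so that "server down" is distinguishable from "no models pulled".

```python
from __future__ import annotations

import json
import urllib.request

DEFAULT_OLLAMA_URL = "http://127.0.0.1:11434"  # Ollama's default bind address


def list_local_models(base_url: str = DEFAULT_OLLAMA_URL,
                      timeout: float = 3.0) -> list[str] | None:
    """Return the names of locally pulled models, or None if Ollama is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):
        # OSError covers refused connections and timeouts (urllib's URLError subclasses it);
        # ValueError covers a response body that is not valid JSON.
        return None
    return [model["name"] for model in payload.get("models", [])]


if __name__ == "__main__":
    models = list_local_models()
    if models is None:
        print("Ollama is not reachable on the default address")
    else:
        print("Installed models:", ", ".join(models) or "(none)")
```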

Phase 3: UK-Specific Configuration and Sovereignty Assurance

To guarantee data sovereignty, the IT team must strictly control data residency. Ensure all model files and system caches are stored on physical servers located within the United Kingdom. If utilising a managed cloud service to simulate local control (a Virtual Private Cloud), deploy exclusively to UK data centers such as AWS London or Azure UK South, bearing in mind that US-headquartered providers remain subject to US jurisdiction wherever the data sits; bare-metal on-premise hardware is therefore strongly preferred.12

System logging must be meticulously configured to comply with UK GDPR data retention policies. All queries and generated responses must be logged for audit trails, with retention policies typically set between six months and two years, depending on specific sector regulations. Crucially, these logs must be encrypted at rest using customer-managed keys.
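To make those audit trails tamper-evident, a common technique is to hash-chain the log: each entry stores the SHA-256 hash of the previous one, so editing, reordering or deleting an earlier record breaks verification. (Removing the newest entries is only detectable if the latest hash is also recorded somewhere separate.) The sketch below is a minimal, in-memory illustration with a hypothetical field layout; a production system would append entries to the encrypted storage described above and apply the retention policy separately, for example by archiving whole verified segments.

```python
from __future__ import annotations

import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # sort_keys gives a deterministic serialisation, so the hash is reproducible
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list[dict], user: str, prompt: str, response: str) -> dict:
    """Append a prompt/response record linked to the previous record's hash."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "prompt": prompt,
        "response": response,
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; an edited, reordered or deleted earlier entry returns False."""
    expected_prev = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body.get("prev_hash") != expected_prev or _digest(body) != entry["hash"]:
            return False
        expected_prev = entry["hash"]
    return True
```

For example, after two calls to `append_entry`, `verify_chain` returns `True`; changing the `response` field of the first entry makes it return `False`.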

Phase 4: Integration with Business Workflows (Financial PDF Analysis)

The true value of a local LLM is realized when it is programmatically integrated into daily business workflows. The following Python script demonstrates how a UK financial services firm can analyse a highly sensitive, unredacted corporate PDF report locally. The script extracts the text and passes it to the local Llama 4 Scout model via the Ollama API, ensuring the financial data never touches the public internet.

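```python
import ollama   # official Ollama Python client (pip install ollama)
import PyPDF2   # PDF text extraction (pip install PyPDF2; successor package: pypdf)

# Define the absolute path to the sensitive corporate document
file_path = '/secure_storage/q4_merger_financials_unredacted.pdf'


def extract_pdf_text(path):
    """Extract raw text from every page, entirely on the local machine."""
    text = ""
    with open(path, 'rb') as file:
        pdf_reader = PyPDF2.PdfReader(file)
        # Iterate through pages and extract the raw text payload
        for page in pdf_reader.pages:
            # extract_text() can yield nothing for scanned (image-only) pages
            text += (page.extract_text() or "") + "\n"
    return text


# Extract text entirely within the local compute environment
document_content = extract_pdf_text(file_path)

# Prompt the local Llama 4 model through Ollama's API on localhost.
# The prompt wording below is illustrative; adapt it to the review task.
response = ollama.chat(
    model='llama4:scout',
    messages=[
        {'role': 'system',
         'content': 'You are a UK financial regulatory analyst. Identify key risks, '
                    'material figures and potential compliance issues.'},
        {'role': 'user', 'content': document_content},
    ],
    # Ollama uses a context window far smaller than Scout's 10M-token maximum
    # unless told otherwise; raise it so long reports are not truncated.
    # 32,768 tokens is an assumed value, bounded in practice by available memory.
    options={'num_ctx': 32768},
)

print("\n--- Local AI Regulatory Analysis Output ---")
print(response['message']['content'])
```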
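Because the usable context window on local hardware is bounded by memory rather than by Scout's 10M-token maximum, very large documents may still need splitting. The fallback below is a minimal character-based chunker with overlap, so that sentences straddling a boundary appear in both chunks. The default sizes are assumptions (on the rough rule of thumb of about four characters per English token, 12,000 characters is about 3,000 tokens) and should be tuned to the `num_ctx` actually configured.

```python
from __future__ import annotations


def chunk_text(text: str, max_chars: int = 12_000, overlap: int = 500) -> list[str]:
    """Split text into windows of at most max_chars, each overlapping the previous one."""
    if not 0 <= overlap < max_chars:
        raise ValueError("overlap must be between 0 and max_chars - 1")
    chunks: list[str] = []
    start = 0
    while start < len(text):
        end = min(start + max_chars, len(text))
        chunks.append(text[start:end])
        if end == len(text):
            break
        start = end - overlap  # step back so adjacent chunks share `overlap` characters
    return chunks
```

Each chunk would then be sent with the same `ollama.chat` call and the partial analyses combined in a final summarisation prompt.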

This direct API integration serves as the foundational building block for more complex agentic systems. For comprehensive workflow automation, integrating tools like n8n can connect this local endpoint to internal SQL databases, customer relationship management software, and UK banking APIs. Look for our upcoming technical guide: *n8n \+ Local Llama: Building UK-Compliant Agentic Workflows*.
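Workflow tools such as n8n typically reach the model through Ollama's HTTP API rather than the Python client. The sketch below shows the equivalent raw request using only the standard library: a `POST` to `/api/chat` with `"stream": false`, so the reply arrives as a single JSON object whose `message.content` field holds the answer. The default address and model tag are assumptions carried over from the examples above, and `send_chat` needs a running Ollama instance.

```python
from __future__ import annotations

import json
import urllib.request


def build_chat_request(prompt: str, model: str = "llama4:scout",
                       base_url: str = "http://127.0.0.1:11434") -> urllib.request.Request:
    """Build a non-streaming chat request for a local Ollama server."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # one JSON response instead of newline-delimited chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(request: urllib.request.Request, timeout: float = 300.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.load(resp)["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarise the key covenants in this facility agreement.")
    print(req.get_method(), req.full_url)  # request is built but not sent here
```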

Phase 5: Security Hardening and Regulatory Alignment

Deploying a local LLM solves the primary data sovereignty issue, but it introduces distinct cybersecurity responsibilities that must be managed internally. The UK's National Cyber Security Centre has issued urgent warnings regarding unique vulnerabilities inherent to large language models, particularly the rise of sophisticated prompt injection attacks.34 In late 2025, security researchers demonstrated "PromptLock," an AI-powered ransomware prototype that utilised local LLM APIs to generate malicious execution scripts autonomously by exploiting unhardened local endpoints.34

To mitigate these emerging risks, local deployments must undergo rigorous security hardening protocols. The local LLM server should reside on a dedicated, isolated Virtual Local Area Network (VLAN). For the highest security classifications, such as those processing unredacted NHS health records or defense contracts, the server should be entirely air-gapped, possessing no inbound or outbound internet routing capabilities whatsoever.

Furthermore, default installations of local tools often lack strict authentication. IT teams must place the LLM API behind a robust reverse proxy enforcing mutual TLS (mTLS) or enterprise-grade OAuth/SAML authentication. Role-based permissions must be implemented to dictate which internal personnel can query specific models. Even when operating locally, prompts should be pre-filtered: Named Entity Recognition can detect and redact sensitive entities, while input screening can flag malicious command structures before they reach internal systems.
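The deny-by-default, role-based model access and prompt screening described above can be sketched as two small checks that a reverse proxy or API gateway would call before forwarding a request to the model. The role names, model assignments and patterns below are hypothetical. A pattern denylist is easily evaded, so treat it as one layer alongside authentication, network isolation and output monitoring, not as a complete defence against prompt injection.

```python
from __future__ import annotations

import re

# Hypothetical mapping of roles to the models they may query.
# Any role or model not listed is refused (deny by default).
ROLE_MODEL_ACCESS: dict[str, set[str]] = {
    "analyst": {"llama4:scout"},
    "legal": {"llama4:scout", "llama4:maverick"},
}

# Illustrative patterns for common injection and command-execution attempts.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"\brm\s+-rf\b", re.IGNORECASE),
    re.compile(r"\bcurl\s+[^|]*\|\s*(sh|bash)\b", re.IGNORECASE),
]


def is_authorised(role: str, model: str) -> bool:
    """True only if the role is known and explicitly granted this model."""
    return model in ROLE_MODEL_ACCESS.get(role, set())


def screen_prompt(prompt: str) -> list[str]:
    """Return the patterns a prompt matches; an empty list means nothing was flagged."""
    return [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(prompt)]
```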

UK Enterprise Case Studies: Sovereign AI in Action

The theoretical benefits of sovereign AI are currently being realized across multiple highly regulated UK sectors, demonstrating tangible return on investment and risk mitigation.

Case Study 1: UK Corporate Law Firm (Contract Analysis)

A 50-partner legal practice based in London specialising in cross-border Mergers and Acquisitions faced a critical technology impasse. The privacy policies of major cloud AI vendors were fundamentally incompatible with the strict Non-Disclosure Agreements mandated by the firm's clients. Sending unredacted acquisition target data to a US server constituted a direct breach of legal privilege and client trust.

To resolve this, the firm procured a dedicated on-premise server running a 70-billion-parameter Llama model via the Ollama runtime, integrated into their existing document management system using the LangChain framework and custom Python scripting. The results were transformative. Because client documents never left the firm's own network, the risk of intellectual property leakage to third-party AI vendors was eliminated. The local AI system reduced initial contract review times by 60%, delivering an estimated £180,000 in annual operational savings compared with the billable hours previously allocated to junior associates for initial discovery tasks. The entire implementation, from hardware procurement to production, was completed in under fourteen days.

Case Study 2: UK HealthTech Startup (Medical Diagnostics)

An AI diagnostic analytics provider, strictly regulated by the Care Quality Commission (CQC), sought to automate the analysis of patient data. However, the processing of UK patient data and electronic health records is heavily restricted by law. Transmitting this highly sensitive data to US-based hyperscalers required complex, legally tenuous Standard Contractual Clauses and massive data redaction overhead that degraded the quality of the AI analysis.

The startup deployed a quantized 13-billion-parameter model, fine-tuned locally on anonymised NHS datasets, directly to edge devices located within hospital wards. By eliminating transatlantic data transfer, the startup greatly simplified its CQC compliance position, since patient data never left UK premises. Furthermore, by eliminating network latency, the local model delivered diagnostic inference three times faster than previous cloud solutions and continued to operate offline during routine hospital network outages.

Case Study 3: UK Financial Services (FCA Regulated Advisory)

A mid-sized wealth management firm operating in the City of London needed to rapidly synthesize global market reports and client portfolios to generate tailored financial advice. However, the leadership was wary of upcoming Financial Conduct Authority stress-testing and stringent accountability rules under the Senior Managers and Certification Regime.35 The FCA expects comprehensive, practical guidance on AI consumer protection, demanding that senior managers provide explicit assurance regarding AI safety and decision-making logic.35

The firm engineered a hybrid deployment utilising Llama 4 Maverick. The firm runs the model locally, utilising rigorous prompt logging mechanisms to satisfy FCA audit requirements regarding exactly how automated financial advice was generated. The result is complete regulatory transparency. The firm's Senior Managers can explicitly point to the immutable, local audit logs to demonstrate assurance and accountability, a feat nearly impossible when relying on the opaque, shifting algorithms of third-party cloud providers.

Total Cost of Ownership (TCO) and the Economic Argument

The prevailing economic narrative favouring cloud computing is predicated on avoiding upfront capital expenditures. However, as enterprise reliance on LLMs scales, variable token pricing quickly becomes an unsustainable operational expenditure burden. The following 5-Year Total Cost of Ownership analysis demonstrates the dramatic, compounding economic advantage of local inference for high-volume enterprise use cases.

The 5-Year TCO Comparison

Consider a scenario where a UK enterprise processes 2,000,000 tokens per day. This is roughly equivalent to analysing 1,000 standard multi-page documents, drafting hundreds of emails, and running basic automated data extraction pipelines daily.

Cloud AI Deployment (e.g., Premium Proprietary Model):

Deploying via a major cloud provider requires zero initial hardware setup cost. However, at an average API rate of £0.002 per 1,000 tokens (blending input and output costs), processing 2,000,000 tokens costs £4 per day, or approximately £1,460 in Year 1. While this appears manageable, enterprise usage can grow rapidly as AI is integrated into more workflows. If volume increases to 30,000,000 tokens per day across a medium-sized enterprise, costs rise to £60 per day, or £21,900 annually. Five years at that volume totals £109,500, and with modest year-on-year growth in usage the 5-year cloud bill approaches £120,000.

Local Sovereign AI Deployment (Dual RTX 5090 Server):

Conversely, the local approach requires a significant initial capital expenditure. Procuring a dedicated server equipped with dual RTX 5090 GPUs requires an upfront investment of approximately £10,000. Following this, the costs are strictly operational. A dual-GPU server draws approximately 1.2 kilowatts under active load. Assuming 8 hours of peak load daily at the forecasted UK average 2026 business electricity rate of 23.33 pence per kWh,39 the daily energy cost is a manageable £2.24 (1.2 kW × 8 h × £0.2333 per kWh).

Maintenance must also be factored in. Estimated at 10 IT hours per month, this adds roughly £800 annually in internal operational cost.40 Therefore, the Year 1 cost comprises £10,000 (hardware), plus £817 (electricity, £2.24 × 365), plus £800 (maintenance), totalling £11,617. From Year 2 to Year 5, the annual cost stabilises at approximately £1,617 per year, giving a total 5-year cost of approximately £18,085 (£11,617 + 4 × £1,617). Note that £800 spread across 120 hours implies an internal rate of under £7 per hour; organisations should substitute their own fully loaded staff cost, which will usually be considerably higher.

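Financial Conclusion: At a sustained 30,000,000 tokens per day, the local server pays for itself within Year 1 (around Month 6 if that volume applies from the outset, later if usage ramps up gradually). Over a five-year horizon, the UK business saves roughly £91,000 at flat volume, and around £100,000 with modest usage growth. At the 2,000,000-token baseline, by contrast, annual cloud spend (£1,460) is lower than the local server's running costs (£1,617), so the economic case rests on high, sustained volume. For such workloads, local inference transforms artificial intelligence from a metered, unpredictable financial liability into a fixed, highly leveraged corporate asset. For further interactive analysis, readers should utilise the *AI ROI Calculator* resource available on our site.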
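The break-even and five-year figures can be reproduced with a few lines of arithmetic. This sketch uses the article's own inputs (£0.002 per 1,000 tokens, a £10,000 server, £817 of electricity and £800 of maintenance per year) and assumes constant daily volume with no price changes or hardware refresh, so it gives a flat 5-year cloud cost of £109,500 rather than the escalated figure quoted earlier.

```python
from __future__ import annotations

CLOUD_RATE_PER_1K = 0.002            # £ per 1,000 tokens, blended input/output
HARDWARE_COST = 10_000               # £ upfront for the dual RTX 5090 server
LOCAL_RUNNING_PER_YEAR = 817 + 800   # £ electricity + maintenance
HORIZON_MONTHS = 60                  # five years


def cloud_monthly(tokens_per_day: float) -> float:
    return tokens_per_day / 1000 * CLOUD_RATE_PER_1K * 365 / 12


def local_monthly() -> float:
    return LOCAL_RUNNING_PER_YEAR / 12


def break_even_month(tokens_per_day: float) -> int | None:
    """First month in which cumulative cloud spend reaches cumulative local spend."""
    for month in range(1, HORIZON_MONTHS + 1):
        cloud = cloud_monthly(tokens_per_day) * month
        local = HARDWARE_COST + local_monthly() * month
        if cloud >= local:
            return month
    return None  # never pays back within the horizon


for volume in (2_000_000, 30_000_000):
    cloud_5y = cloud_monthly(volume) * HORIZON_MONTHS
    local_5y = HARDWARE_COST + local_monthly() * HORIZON_MONTHS
    print(f"{volume:>11,} tokens/day: cloud £{cloud_5y:,.0f}, local £{local_5y:,.0f}, "
          f"break-even month: {break_even_month(volume)}")
```

On these inputs the 30,000,000-token scenario pays back in month 6, while at 2,000,000 tokens per day the five-year cloud bill (about £7,300) never catches up with the local server's costs.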

The AI ROI Spreadsheet Formula

To conduct a bespoke analysis for specific enterprise environments, IT managers should utilise the following mathematical logic. To calculate the Monthly Cloud Cost versus the Local Cost in standard spreadsheet software:

* Cell A1 (Daily Tokens): Enter expected daily token volume (e.g., 2000000).
* Cell A2 (Cloud Cost per 1k Tokens): Enter the vendor rate in £ (e.g., 0.002).
* Cell A3 (UK kWh Rate): Enter the commercial electricity rate in £ per kWh (e.g., 0.2333).
* Cell A4 (Server Power Draw, kW): Enter the hardware power draw under load (e.g., 1.2).
* Cell A5 (Active Hours/Day): Enter hours of intensive processing (e.g., 8).
* Cloud Monthly OpEx Formula: `=((A1/1000)*A2)*30`
* Local Monthly Electricity Formula: `=(A4*A5*A3)*30`

With the example values, these return £120.00 and £67.19 per month respectively; the local figure excludes hardware and maintenance costs.

Operational Realities: Limitations and Strategic Trade-Offs

While the case for sovereign AI is highly compelling, IT leadership must pragmatically acknowledge the inherent limitations and technical friction associated with local deployment to ensure successful, sustainable integration.

1\. The Model Quality Gap

While open-weight models like Llama 4 and Mistral Large now rival the proprietary models of 2024, the cutting edge of complex reasoning, advanced mathematics, and coding remains narrowly dominated by the latest closed-source cloud models. Local models can struggle with highly technical nuance or require more meticulous prompting to achieve the same zero-shot output quality. To mitigate this, organisations should implement an intelligent API routing system: use local models for the bulk (around 90%) of daily operational tasks, document summarisation, and sensitive data processing, and route only non-sensitive, exceptionally complex problems to cloud APIs when the local model fails a predefined confidence threshold.
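The routing policy described above can be expressed as a small function. In this sketch, `local_model` and `cloud_model` are hypothetical callables standing in for real clients, the 0.7 threshold is an assumed value, and the confidence score is assumed to come from your own evaluator (for example, a validation check on the output). Producing a trustworthy confidence signal is the hard part and is not shown. Sensitive prompts never go to the cloud, whatever the local score.

```python
from __future__ import annotations

from typing import Callable, Tuple

LocalModel = Callable[[str], Tuple[str, float]]  # returns (answer, confidence in 0..1)
CloudModel = Callable[[str], str]


def route(prompt: str, sensitive: bool, local_model: LocalModel,
          cloud_model: CloudModel, threshold: float = 0.7) -> tuple[str, str]:
    """Return (backend, answer): local first, cloud only for non-sensitive low-confidence cases."""
    answer, confidence = local_model(prompt)
    if sensitive or confidence >= threshold:
        # Sensitive data stays local even when confidence is low;
        # such cases are better flagged for human review than sent out.
        return "local", answer
    return "cloud", cloud_model(prompt)


if __name__ == "__main__":
    stub_local = lambda p: ("draft answer", 0.4)  # stub: low-confidence local result
    stub_cloud = lambda p: "cloud answer"         # stub cloud client
    print(route("Prove this lemma", False, stub_local, stub_cloud))
    print(route("Client PII query", True, stub_local, stub_cloud))
```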

2\. The Maintenance and Infrastructure Burden

Local AI is not a static, "set-and-forget" software appliance. It requires dedicated maintenance hours every month.40 Internal technology teams must apply rolling security patches, update massive model weights as new iterations are released, and resolve complex hardware dependencies, such as inevitable CUDA driver conflicts. Data center maintenance services predict that by 2026, IT leaders will need to use AI-driven intelligence simply to monitor thermal output and component degradation in localised servers operating under constant inference loads.42 To mitigate this burden, businesses lacking deep ML engineering talent should use managed local deployments hosted on UK soil, or partner with specialised IT service providers who offer "CTO-as-a-service" AI infrastructure maintenance.43

3\. Initial Setup Complexity

Unlike acquiring an OpenAI API key, which takes minutes, provisioning a secure local environment typically takes one to two weeks for a basic deployment, and up to three months for a production-grade system with custom fine-tuning and robust cybersecurity hardening. Organisations can mitigate this by starting with user-friendly tools such as Ollama to prove value in specific departments before committing to bespoke infrastructure at scale.
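To show how small a first Ollama pilot can be: once Ollama is installed and a model has been pulled, it serves a REST API on `localhost:11434` by default, and a non-streaming call to its `/api/generate` endpoint returns JSON whose `response` field holds the completion. The sketch uses only Python's standard library; the model tag `llama3.3` is an example, so substitute whichever model `ollama list` shows on your machine.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request; the prompt never leaves the machine."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the model's text from the 'response' field."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    print(generate("llama3.3", "Explain in two sentences what data sovereignty means for a UK business."))
```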

4\. Regulatory Drift and Payment Card Industry Standards

The UK's AI regulatory framework is evolving rapidly. While the Data (Use and Access) Act 2025 provides current clarity, future AI licensing regimes or the outcome of copyright disputes could change whether certain open-weight models may be used commercially.45 Furthermore, if the local AI system processes payment card data, it must comply with the Payment Card Industry Data Security Standard (PCI DSS) v4.0.1.47 This requires meticulous network diagramming, firewall configurations that deny all traffic by default, and granular tracking of all user access to the LLM environment.47 IT leaders should maintain strict architectural separation between foundational model weights and internal corporate data, so that models can be hot-swapped without disrupting application logic if regulatory winds shift.
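One way to keep model weights separate from application logic, while recording who used the system, is to route every call through a thin gateway that resolves the model from configuration and writes an access record. The sketch below is hypothetical: `LLMGateway`, the stub backends and the log fields are our own names, the log stores prompt length rather than prompt content, and it illustrates per-user access tracking and config-driven model swapping rather than a complete PCI DSS control.

```python
import json
import time
from typing import Callable, Dict, List

# A backend is any callable that takes a prompt and returns text (e.g. a local runtime client).
ModelBackend = Callable[[str], str]


class LLMGateway:
    def __init__(self, backends: Dict[str, ModelBackend], active_model: str):
        if active_model not in backends:
            raise KeyError(f"Unknown model: {active_model}")
        self.backends = backends
        self.active_model = active_model  # set from config, not hard-coded in app logic
        self.audit_log: List[str] = []    # in production: an append-only, tamper-evident store

    def swap_model(self, name: str) -> None:
        if name not in self.backends:
            raise KeyError(f"Unknown model: {name}")
        self.active_model = name

    def ask(self, user_id: str, prompt: str) -> str:
        model = self.active_model
        ok = False
        try:
            answer = self.backends[model](prompt)
            ok = True
            return answer
        finally:
            # Logged even if the backend raises, so failed attempts are tracked too.
            self.audit_log.append(json.dumps({
                "ts": time.time(), "user": user_id, "model": model,
                "prompt_chars": len(prompt), "ok": ok,
            }))


if __name__ == "__main__":
    # Stub backends stand in for real local runtimes (hypothetical names).
    gateway = LLMGateway({"model-a": lambda p: "A: " + p,
                          "model-b": lambda p: "B: " + p}, active_model="model-a")
    gateway.ask("alice", "Draft a supplier email.")
    gateway.swap_model("model-b")  # e.g. after a licensing change
    gateway.ask("bob", "Summarise this contract clause.")
    print("\n".join(gateway.audit_log))
```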

Toolchain Analysis for Local Orchestration

To operationalise local LLMs effectively, the software orchestration layer is as critical as the underlying silicon. TopTenAIAgents has evaluated the premier local LLM deployment tools to help UK businesses navigate the technical shift to sovereign infrastructure. The following strategic comparison highlights the top-tier solutions available in 2026:

| Deployment Tool | Ease of Implementation | Performance & Scalability | Ideal UK Enterprise Use Case |
|---|---|---|---|
| Ollama | ✅ Very Easy (CLI focused, excellent documentation) | ⚠️ Good (Standard throughput for daily tasks) | Best for immediate implementation, rapid prototyping, and SME internal desktop tools. |
| LM Studio | ✅ Easy (Intuitive Graphical User Interface) | ⚠️ Good (Excellent AMD/Intel NPU hardware support) | Ideal for non-technical users and evaluating various models locally on employee laptops. |
| vLLM | ⚠️ Moderate (Requires Linux administration and Docker) | ✅ Excellent (High throughput, efficient memory paging) | Best for production-grade web applications requiring high token generation speed and high user concurrency. |
| TensorRT-LLM | ⚠️ Complex (Highly specific to NVIDIA architecture) | ✅ Fastest (Maximum optimised execution speed) | Enterprise data centres seeking to maximise the ROI of expensive L40S or RTX 5090 GPU clusters. |
| llamafile | ✅ Very Easy (Single executable file deployment) | ⚠️ Good (Highly portable across operating systems) | Best for deploying self-contained, offline AI agents seamlessly embedded within broader software packages. |
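Several of these tools can expose an OpenAI-compatible HTTP API, so it is often possible to prototype on one and move to another by changing only a base URL. The sketch below assumes the default local ports we understand each tool to use (Ollama 11434, LM Studio 1234, vLLM 8000, llamafile 8080); all are configurable, so verify them against your own installation. TensorRT-LLM is omitted because its serving setups vary too much to assume a default, and the model name is whatever the chosen server has loaded.

```python
import json
import urllib.request

# Assumed default base URLs for each tool's OpenAI-compatible server; all are configurable.
BASE_URLS = {
    "ollama": "http://localhost:11434/v1",
    "lmstudio": "http://localhost:1234/v1",
    "vllm": "http://localhost:8000/v1",
    "llamafile": "http://localhost:8080/v1",
}


def chat_request(tool: str, model: str, user_message: str) -> urllib.request.Request:
    """Build a /chat/completions request; only the base URL differs between tools."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        f"{BASE_URLS[tool]}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(tool: str, model: str, user_message: str) -> str:
    """Send the request and return the first choice's message content."""
    with urllib.request.urlopen(chat_request(tool, model, user_message), timeout=120) as resp:
        return json.loads(resp.read())["choices"][0]["message"]["content"]
```

Moving an Ollama prototype to a vLLM production server then becomes a one-word configuration change, which also supports the hot-swap requirement discussed under regulatory drift.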

Strategic Outlook: The 2026 UK Sovereign AI Roadmap

The trajectory of artificial intelligence adoption in the United Kingdom indicates a decisive, irreversible move away from centralised, foreign-owned dependencies. As the underlying technology matures and integrates deeply into the fabric of the UK economy, the definition of corporate cybersecurity is expanding to include algorithmic sovereignty.

Strategic Predictions for 2026

Looking ahead, several key milestones will define the UK AI landscape in the coming year. In Q2 2026, the release of highly optimised, domain-specific open-weight models will result in a major shift: more UK businesses will deploy their first local LLM than will subscribe to new enterprise cloud AI seats. The market emphasis will shift decisively from acquiring generic "general intelligence" toward utilising highly accurate, localised "specialised intelligence" tailored to specific corporate datasets.50

By Q3 2026, operating in conjunction with the Sovereign AI Unit's rapid expansion and the full implementation of the Data (Use and Access) Act 2025, the first UK government departments will formally mandate local or sovereign-hosted AI architectures for processing classified citizen data. This will establish an unavoidable procurement standard that the private sector (particularly government contractors) will be forced to swiftly adopt.51

Finally, in Q4 2026, as the Financial Conduct Authority implements its comprehensive guidance on AI consumer protection, financial institutions using opaque, cloud-based "black box" models will face severe compliance friction over accountability under the Senior Managers and Certification Regime (SM\&CR).35 This regulatory pressure will trigger a broad industry migration toward fully auditable local LLM architectures with version-pinned models and reproducible outputs.

Actionable Next Steps for UK Business Leaders

To prepare for this landscape, technology leaders must take immediate, pragmatic action. First, organisations must conduct a comprehensive audit of their cloud dependency. IT teams must identify every single business process that currently transmits corporate data, personally identifiable information, or intellectual property to external AI APIs, actively quantifying the legal, physical, and financial exposure this reliance creates.
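A practical first pass at this audit is to count outbound requests to known AI API hosts in web-proxy or firewall logs. The sketch below assumes a hypothetical whitespace-separated log format of `timestamp user host`, so adapt the parsing to your proxy's real format. The host list covers a few well-known providers and is illustrative, not exhaustive.

```python
from collections import Counter
from typing import Iterable

# Illustrative, not exhaustive: extend with any other AI endpoints your organisation uses.
AI_API_HOSTS = (
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
    "api.mistral.ai",
    ".openai.azure.com",  # leading dot: matches any Azure OpenAI resource subdomain
)


def is_ai_host(host: str) -> bool:
    """Exact match for plain entries, suffix match for entries starting with a dot."""
    host = host.lower().rstrip(".")
    return any(host.endswith(h) if h.startswith(".") else host == h for h in AI_API_HOSTS)


def count_ai_traffic(log_lines: Iterable[str]) -> Counter:
    """Count requests per (user, host) for lines formatted 'timestamp user host'."""
    counts: Counter = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed lines
        _, user, host = parts[:3]
        if is_ai_host(host):
            counts[(user, host.lower())] += 1
    return counts
```

Grouping by user and host gives a first map of which teams depend on external AI APIs; tying those users to specific business processes remains a manual step.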

Second, businesses should execute a controlled local pilot. Procure a high-end NPU-equipped laptop or a single dedicated desktop GPU workstation, deploy Ollama and the Llama 4 Scout model, and pilot one specific, high-privacy use case, such as answering internal human resources policy queries or summarising highly confidential financial documents.

Third, leadership must calculate long-term Total Cost of Ownership. Do not evaluate AI integration based solely on immediate capital expenditure or the deceptive cheapness of initial API calls. Use rigorous 5-year TCO modelling to understand how the near-zero marginal cost of local LLMs creates a compounding financial and competitive advantage over time.
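A minimal 5-year TCO comparison can be expressed as cumulative monthly costs, with the local option's capital outlay counted up front. Every figure in the example run is a hypothetical placeholder to replace with your own quotes: the £8,000 capex, the £400 monthly maintenance allowance and the £2,500 monthly cloud spend are not benchmarks, and the electricity figure reuses the spreadsheet example's result of roughly £67 a month. `break_even_month` returns the first month in which local becomes cheaper, or `None` if that never happens within the horizon.

```python
from typing import List, Optional, Tuple


def cumulative_costs(capex: float, local_monthly: float, cloud_monthly: float,
                     months: int = 60) -> List[Tuple[int, float, float]]:
    """(month, cumulative local cost, cumulative cloud cost) for each month."""
    rows = []
    local_total, cloud_total = capex, 0.0  # capex is paid before month 1
    for m in range(1, months + 1):
        local_total += local_monthly
        cloud_total += cloud_monthly
        rows.append((m, local_total, cloud_total))
    return rows


def break_even_month(capex: float, local_monthly: float, cloud_monthly: float,
                     months: int = 60) -> Optional[int]:
    """First month in which cumulative local cost is no higher than cloud cost."""
    for m, local_total, cloud_total in cumulative_costs(capex, local_monthly, cloud_monthly, months):
        if local_total <= cloud_total:
            return m
    return None


if __name__ == "__main__":
    # Hypothetical inputs: electricity plus an allowance for maintenance staff time.
    capex, local_monthly, cloud_monthly = 8_000.0, 67.19 + 400.0, 2_500.0
    rows = cumulative_costs(capex, local_monthly, cloud_monthly)
    _, local_5y, cloud_5y = rows[-1]
    print(f"5-year local: £{local_5y:,.0f}  cloud: £{cloud_5y:,.0f}")
    print("Break-even month:", break_even_month(capex, local_monthly, cloud_monthly))
```

Keeping staff time inside `local_monthly` matters: excluding maintenance hours is the most common way such comparisons overstate the local advantage.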

Finally, enterprises must internalise AI competency. The transition to sovereign AI demands deep internal expertise. Rather than relying entirely on vendor-locked solutions marketed by massive hyperscalers, businesses must invest in upskilling their internal IT and development teams in open-source AI orchestration. In 2026, artificial intelligence is no longer an experimental novelty; it is foundational corporate infrastructure. For UK businesses, treating that infrastructure with the exact same security, privacy, and sovereignty protocols as their internal financial databases is not merely a box-ticking compliance exercise. It is the definitive strategic advantage of the decade.

Works Cited

1. UK's Data (Use and Access) Act 2025 – What Does It Change. Alston & Bird, accessed 11 February 2026.

2. AI Opportunities Action Plan: One Year On. GOV.UK, accessed 11 February 2026.

3. Experience Unparalleled Performance with the AMD Ryzen™ AI Max+ 395 Processor. AMD Blog, accessed 11 February 2026.

4. Llama 4 GPU System/GPU Requirements. Running LLaMA Locally. Bizon Tech, accessed 11 February 2026.

5. Invisible highways: The vast network of undersea cables powering our connectivity. UN News, accessed 11 February 2026.

6. Risk of undersea cable attacks backed by Russia and China likely to rise, report warns. The Guardian, accessed 11 February 2026.

7. Is Red Sea cable sabotage a sign of next maritime security frontier? The National News, accessed 11 February 2026.

8. Subsea telecommunications cables: resilience and crisis preparedness. Parliament UK, accessed 11 February 2026.

9. How vulnerable is the UK to undersea cable attacks? Parliamentary Committees, accessed 11 February 2026.

10. Are US Cloud Services an emerging risk for European companies? Gislen Software, accessed 11 February 2026.

11. The Risks of Relying on U.S. Cloud Providers. Wire, accessed 11 February 2026.

12. CLOUD Act and FISA 702: Is your cloud data truly sovereign? Civo.com, accessed 11 February 2026.

13. White Paper Demystifying the debate on the US CLOUD Act vs European/UK Data Sovereignty. CMS LawNow, accessed 11 February 2026.

14. When Governments Pull the Plug. Lawfare, accessed 11 February 2026.

15. Accelerating Llama.cpp Performance in Consumer LLM Applications with AMD Ryzen™ AI 300 Series. AMD Blog, accessed 11 February 2026.

16. Intel® Core™ Ultra Processors (Series 2) - 2 | Performance Index. Intel, accessed 11 February 2026.

17. Intel Achieves First, Only Full NPU Support in MLPerf Client v0.6 Benchmark. Intel Newsroom, accessed 11 February 2026.

18. Local LLM Hardware Guide 2025: GPU Specs & Pricing. Introl Blog, accessed 11 February 2026.

19. Energy price cap explained. Ofgem, accessed 11 February 2026.

20. Average UK Business Energy Prices in 2026. BusinessComparison, accessed 11 February 2026.

21. Understanding the Data (Use and Access) Act: what businesses need to know. Womble Bond Dickinson, accessed 11 February 2026.

22. UK data reforms unpacked: implications for AI and other automated decision-making. Freshfields, accessed 11 February 2026.

23. Understanding the Data (Use and Access) Act 2025: Implications for UK Businesses. Charles Russell Speechlys, accessed 11 February 2026.

24. House of Commons - Hansard - PARLIAMENTARY DEBATES. UK Parliament, accessed 11 February 2026.

25. Daily Report Monday, 24 November 2025. UK Parliament, accessed 11 February 2026.

26. 2025: Lifting the lid on Isambard-AI | Research. University of Bristol, accessed 11 February 2026.

27. Isambard-AI, the UK's Most Powerful AI Supercomputer, Goes Live. NVIDIA Blog, accessed 11 February 2026.

28. Synthetic Data Generation Archives. NVIDIA Blog, accessed 11 February 2026.

29. Inside Isambard-AI: The UK's most powerful supercomputer. IT Pro, accessed 11 February 2026.

30. Meta's New Llama 4 Models Stir Controversy. BankInfoSecurity, accessed 11 February 2026.

31. Ryzen Ai Max+ 395 vs RTX 5090. Reddit r/LocalLLaMA, accessed 11 February 2026.

32. Computer spec for running large AI model (70b). Reddit r/LocalLLaMA, accessed 11 February 2026.

33. OCI Price List. Oracle, accessed 11 February 2026.

34. AI Blog and News - AI Compliance and Governance Consulting. Dynamic Comply, accessed 11 February 2026.

35. New developments for AI in UK financial services. Hogan Lovells, accessed 11 February 2026.

36. House of Commons Treasury Committee report on AI in financial services. Regulation Tomorrow, accessed 11 February 2026.

37. Artificial intelligence in financial services. Parliament UK, accessed 11 February 2026.

38. The FCA's long term review into AI and retail financial services. Financial Conduct Authority, accessed 11 February 2026.

39. Business Electricity Prices per kWh | February 2026. Business Energy Deals, accessed 11 February 2026.

40. How UK SMEs Are Using AI in 2026. Air IT Group, accessed 11 February 2026.

41. UK SME AI Adoption 2026: The £78bn Gap & How to Start in Q1. Harri Digital, accessed 11 February 2026.

42. 2026 Data Center Maintenance for Enterprise IT. Maintech, accessed 11 February 2026.

43. Running LLMs on Runpod with Open WebUI on Windows. 365i, accessed 11 February 2026.

44. Digital Transformation Roadmap for UK SMEs (2026 Guide). Red Eagle Tech, accessed 11 February 2026.

45. Copyright and artificial intelligence statement of progress under Section 137 Data Act. GOV.UK, accessed 11 February 2026.

46. Who actually benefits from an AI licensing regime? Not the creators nor the UK. British Progress, accessed 11 February 2026.

47. Ultimate Guide to PCI DSS Compliance in 2026. Venn, accessed 11 February 2026.

48. PCI Compliance Checklist: 12-Step Guide for 2026. Telnyx, accessed 11 February 2026.

49. Complete Process of PCI Compliance (2026 Guide). Delve, accessed 11 February 2026.

50. Building Private LLMs in 2026: Ultimate Secure Guide. Asapp Studio, accessed 11 February 2026.

51. UK Guidelines for AI Procurement. Digital Government Hub, accessed 11 February 2026.

52. New guidelines aim to make UK government datasets AI-ready. Global Government Forum, accessed 11 February 2026.

Key Takeaways

- Geopolitical Risk: UK businesses face operational kill switches if transatlantic cables fail or US jurisdiction compromises data access under CLOUD Act/FISA 702.

- Hardware Revolution: 2026 NPU laptops (AMD Ryzen AI Max+, Intel Core Ultra 9) and dual RTX 5090 servers run 70B models locally at <£10,000 capex.

- UK Policy Support: £500m Sovereign AI Unit and Data (Use and Access) Act 2025 create regulatory tailwinds for domestic AI infrastructure.

- TCO Advantage: Local LLMs break even in Year 1 for high-volume use, saving £100,000+ over 5 years vs. cloud APIs.

- Deployment Simplicity: Tools like Ollama enable a basic deployment within one to two weeks; UK case studies show law firms and healthtech firms achieving full compliance.

TTAI.uk Team

AI Research & Analysis Experts

Our team of AI specialists rigorously tests and evaluates AI agent platforms to provide UK businesses with unbiased, practical guidance for digital transformation and automation.
